DB Amorin [00:00:05]

Cool. Well here we are. Welcome! This is our third conversation in our series. We’re convening today with our first guest, Emily Martinez from Los Angeles, California. and we’re going to be talking today primarily about AI and Machine Learning, amongst other of these other texts that weave in and out. The kind of container that we’ve built for the conversation is “Acceleration and Slowness” just thinking about the turnover from Web 2.0 to Web 3.0 and the kind of role that the algorithm itself has played in that and the roles in which those texts illuminate these darker areas of technology and areas where things are obfuscated or not as clear and how we can wrap our heads around where all this is going, using this technology. So I guess we should re-introduce ourselves for people who are tuning in for the first time. Maybe Ralph could start with a brief introduction?

Ralph Pugay [00:01:15]

Yeah! Hi Emily, my name is Ralph, I’m a Gemini, and I’m an artist and educator. I grew up in the Philippines, but have mainly been making work in the form of paintings, drawings, and socially-engaged projects. But usually has to do with concepts of, like, how do you translate trauma to an audience, but also how do you think about optimization within a practice of art in relation to the social… I dabble in so many different things. I’m kind of ADD in that way so I go from one subject to another.

Emily Martinez [00:02:06]

I can relate.

Tabitha Nikolai [00:02:10]

Cool, well I can jump in. Emily, thank you for being here. I’m so excited to talk to you. My name is  Tabitha Nikolai. I’m a new media visual artist, and teacher. I do installations, sometimes performance, always kind of using video games as a touchstone or predominantly video games as a way of exploring fantasy selves, pocket spaces ways of reflecting on this reality through pocket realities, investigating online subcultures or, the manifest… like the social implications of online technologies, stuff like that. Yeah, I’m excited to have you here!

Tabitha Nikolai. I’m a new media visual artist, and teacher. I do installations, sometimes performance, always kind of using video games as a touchstone or predominantly video games as a way of exploring fantasy selves, pocket spaces ways of reflecting on this reality through pocket realities, investigating online subcultures or, the manifest… like the social implications of online technologies, stuff like that. Yeah, I’m excited to have you here!

DB Amorin [00:02:57]

DB Amorin [00:02:57]

And I’m DB Amorin, I primarily work in video and sound, investigating the role in which error plays as a way to investigate identity, or investigate the ways in which we deviate from preconceived notions of ourselves and our world around us. A lot of my work takes the form of installations or videos and media that self-destruct over time, and teasing apart the gaps between function and dysfunction, and how they can relate to larger worldview and perspectives.

Emily and I had met in LA at a panel that we are both part of at UCLA, the NEA’s Tech As Art Field Scan. And I was so excited when we came upon this project to reach out and talk to you, get you as part of this project and talk with us about your practice and the ways in which it intersects with AI and machine learning, and how you use those technologies to tear itself apart and reform it in a way that you find is beneficial to you and your community. So, welcome! Thank you for visiting us, thanks for talking with us about your practice. We’d love to hear more from you.

Emily Martinez [00:04:25]

Thank you all. I’m so happy to be here and so happy you all reached out to chat. These are the kind of  the moments I live for, no joke. To give a brief introduction: I’m a Cancer, Scorpio, Scorpio, born in Cuba, first generation immigrant to Miami, which is where I grew up in Florida. I’ve been in California now for 11 years. In LA since 2012. So this has been the last nine years of my life. And this is where I started my journey into digital arts / new media. This was like the whole journey over to where this conversation is happening.

the moments I live for, no joke. To give a brief introduction: I’m a Cancer, Scorpio, Scorpio, born in Cuba, first generation immigrant to Miami, which is where I grew up in Florida. I’ve been in California now for 11 years. In LA since 2012. So this has been the last nine years of my life. And this is where I started my journey into digital arts / new media. This was like the whole journey over to where this conversation is happening.

I started working with machine learning in 2018 with a project called Queer AI which is a collaboration with Ben Lerchin, and we created this chat bot that was trained on queer theater. And then last year I started working on a beginner’s guide for Machine Learning, and that was facilitated by the Processing Foundation. I had a fellowship through them working with ml5js, which is their Machine Learning Library for education and starting [out], beginner, easy access stuff.

I still haven’t finished that. But the the core of that was focused on text-generating bots and also centering the needs of communities that were working with their own text with archives and with what other folks have called “small data,” which is a term that I’ve heard Stephanie Dinkins use and Allison Parish to point out the opposite of big data which is what a lot of this tech requires, or so we’re told. And then within that context of unsupervised machine learning for text generation, I’ve been thinking a lot about… not so much about how AI is used for prediction which, yes, that informs things… But as far as how I’m working I’m trying to think about this tension between a poetic of how to suss something else out of that, and how to use these machine learning, bots as tools for mirroring and self reflection. I think they are things that mirror back to us things about the underlying culture and the structure, and everything else, good or bad. Most recently, I started to explore image generating stuff, and multimodal AI. So working with the VQGAN+Clip which we might talk about at some lengths or not.

So that’s part of my practice most recently. I wanted to say something else… oh yeah! This is also not my day job. I have this other world, this other life, right. I still use technology. I make websites and do stuff in marketing. So it’s something where when I get to have a conversation like this and I get to engage with folks thinking about this in a way that we are, that is the gift. This is why I do this. And there can be long periods of time where I forget who I am. I just watched the new Matrix and I’m like, “Oh, this is the metaphor for everything. I’ve been in the Matrix. And these people just plucked me out and now I can remember who I am.” (laughs)

This is also not my day job. I have this other world, this other life, right. I still use technology. I make websites and do stuff in marketing. So it’s something where when I get to have a conversation like this and I get to engage with folks thinking about this in a way that we are, that is the gift. This is why I do this. And there can be long periods of time where I forget who I am. I just watched the new Matrix and I’m like, “Oh, this is the metaphor for everything. I’ve been in the Matrix. And these people just plucked me out and now I can remember who I am.” (laughs)

RP [00:08:03]

Was it good?

EM [00:08:10]

Um, I have mixed feelings about it. I’m not sure. I have to watch it again. I think there’s some interesting things that they tried to play with. Some of the story is interesting… but I don’t know. Have any of you watched it?

DB [00:08:24]

I attempted and I was thrown off by how self referential it was and was like, “I can’t right now.”

EM [00:08:36]

Yeah, Yeah, yeah, I feel you.

TN [00:08:41]

Maybe if I can ask a question that kind of riffs on that. I think part of our intentionality with this project is to rupture that bubble around, I don’t know, enhancing people’s tech literacy. Maybe could you talk a little bit about like, you talked about a turning point just now for yourself where you started to learn about these things? What was your process like for that personally, and then what made you not only want to do that for yourself but also generate these learning materials to help get other people free with that?

EM [09:15]

Wait, is this a question for me? Yeah, okay. Sorry, I was just like…

TN [00:09:14]

Sorry, I asked you in a weird way. I can rephrase it if I need to.

In your intro I heard you talk about maybe a revolutionary moment, maybe when you moved to LA or had been there for a while, where you started to get more into tech and maybe self-educating is what I understood about that? And so knowing that one of the goals we have with this project is these systems are so opaque to so many people, but increasingly dominating people’s narratives. How did you get free of that and then what made you decide to take that into a pedagogical practice?

EM [00:09:57]

So going back, when I moved to California actually went to Santa Cruz. I did a Masters there at UC Santa Cruz, the Digital Arts and New Media one, so that was my deep dive into theory and really for the first time engaging with tech in a critical way, and making art and being really excited about it. Then moving here, I came here with no skills, you know? “Wow, I learned theory and I can make better art but how do I survive?” So when I moved to LA is when I started to learn, I started to teach myself front-end development stuff. I can build websites and make money and make art and whatever.

how do I survive?” So when I moved to LA is when I started to learn, I started to teach myself front-end development stuff. I can build websites and make money and make art and whatever.

I was learning on my own just doing these tutorials, but then also going out into the world and going to meetups and going to try to find community, and going to tech events, and just growing increasingly more enraged and disillusioned with even the ones that were billing themselves… I think at the time the narrative was about “women.” “Women in tech” was the big thing. And we still hadn’t even moved to diversity and inclusion like that, that language wasn’t even there yet. They were just slowly tiptoeing… Well, they discovered women. This is 2010. And it was awful. You know I’d go to ones that were supposedly about education, and everything was just about how to monetize learning and how to extract more value from people so we can ruin education for the masses on these tech platforms.

So it was something that I think over time trying to find community, seeing what’s out there and then, in the art world, things are also very (at least here) seem to be not… I wasn’t finding community, you know? Like the community where people were having this kind of conversation was still on the internet, really. And they were scattered all over the place, like certain cities, or certain university departments, whatever. Wherever that happened, to me it was not happening in LA.

I’m trying to think of other things that were going on. Oh! The sharing economy. That’s also the time period where that starts to, you know, 2014, 2015 that language starts emerging. I actually worked with a collaborator; we did a whole body of work around that whole thing. And how they’re using this language and these ideas that have to do with community, sharing, collaboration to subsume them into capitalist, extractive, exploitative, whatever, practices. So there’s a lot of exploring I did there, and by the time I got to the machine learning AI stuff, It was just…I think one of you said that you jump around and, you know, you’re kind of ADD about [areas off your practice], and I’m the same where there’s this new technology. It’s not really new, but for me we’re living in this networked age were software runs everything. Part of my going to grad school and studying this the way that I did is because I felt like as an artist this is gonna influence our lives no matter what. And I want to understand this world that we’re in. And I want to be able to engage in a critical art practice that has an awareness of how these software systems, how these algorithms, how all this technology influences, everything.

So coming back to being here and then the move towards AI, I was just curious. There’s something really fun about like, “Oh my god, it generates text? Sign me up, I want to do this.” And then realizing I don’t know how to do this. (laughs) I don’t have the tech skills. It’s still like 2017. My friend Ben Lerchin, who’s my collaborator with Queer AI, Ben is a software engineer and had more of the tech savvy. So the first project we worked on Ben did all the coding and I wrote a manifesto and I had this whole vision for what I would ideally want. It’s stated in the manifesto to some extent, there’s a flat-out rejection of the rapey robot sex trope of what a lot of these chat bots are. If we’re talking about a “queer AI,” we’re trying to think about what does that mean? What does that mean in terms of how we relate to each other as people? In terms of desire, in terms of bodies. Are we embodied? All of the ways. I was looking at sexting bots, you know? And everything was the femme-bot fantasy. The subservient femme-bot. And we’re like, “No, we’re making the opposite of this.” There’s something that fucks with this.

EM [00:15:36]

Then it was last year when I wrote my application for this ml5 fellowship. The tech is now getting to a place where I might be able to build something myself. And if I can do that I want to teach other folks how to do that. And I think for me engaging with it creatively is a way, because if I just engage with this stuff intellectually it’s really depressing, right? When we think about how this stuff is used already in the world: to surveil, to extract, to exploit, to keep accelerating the way power just keeps holding. You know, it’s just not fun. And those conversations are being had and they’re super important but my way of engaging it’s like, “No, I want to do something with this that is going to be surprising and maybe depressing.” But also hopefully there’s something that can come forth that feels like…. Is there something here that we can rescue that can be generative and can be actually beneficial? And at the very least, the goal is to be able to have broader conversations with people and bring a greater understanding to what are increasingly more and more complex and intertwined systems.

Does that answer the question?

(laughs)

TN [00:17:18]

So much, so much. So much hooks to jump onto there. Yeah, absolutely.

RP [00:17:21]

Yeah, a lot of questions that are popping up in my head, particularly around dissemination of information. Because part of your work is about education and thinking about these emerging technologies that I always think about in relation to education as, okay these things are tools right? So we can talk about democratizing these tools so that everyone has access to them. But I’m thinking a lot about ethics and the way that we disseminate ethics, along with the learning of these technologies. I’m just wondering how you’re thinking about that? Or if you are? I mean, I’m not I’m not implying that you are. Because I’m curious, generally. Because my question all the time is if these technologies are extractive, and we’re starting off from there then it’s like we’re kind of skipping something right?

@sineadbovell #facialrecognition #technology #ai #futurist #greenscreen #greenscreenvideo

EM [00:18:34]

Right, right. Yeah, that’s a really good question.

I think part of the education piece for me is I do a lot of reading, a lot of learning. Part of this toolkit thing I wanted to make with the yet-to-be finished ML5 project was to point out people are already doing the research and setting up frameworks. There’s already so many ethical frameworks. It’s not that these things don’t exist, they’re just not being used broadly, right? Like they are being used within certain communities. So, for example the researcher at Google that was fired, Timnit Gebru. Are you familiar? That was somebody whose job was to research the language of the giant generative language models and some of the AI tools that Google was using, and then report back on what bias, what problems do these things have. And literally for doing their job they are fired. Most recently, I read that they’re starting their own independent research center which I haven’t looked into too deeply. But it’s something I think about, where I’m like, how do we counter all of this stuff? We need more independent research, period. Because the tech companies are not going to do it. And then when they employ someone to do it, they don’t really want them to do it because when that person literally does their job, they’re removed.

@professorcasey Some of you asked what I thought of Google firing Timnit Gebru. #techtok

So that’s part of the education piece, okay, let’s figure out all the layers of what’s going on. And then folks doing work either within different education communities or on their own. I was just listening to an interview with – I have some notes here – with Jason Edward Lewis, Indigenous AI and that whole protocol, if you are familiar with that at all? Well, I haven’t dug too deep, but they’re working with indigenous communities and trying to build capacity within their own communities to build the systems themselves. In response to Silicon Valley [being] all about scale, and that scale erases difference, and we’re not okay with that. There they talked about having some of these tech companies try to parachute in and again extract knowledge. Like, “Oh, we’re going to build something with your indigenous knowledge base,” and the response is, “No you’re not, we’re going to do this ourselves.” And Jason, in the discussion that I heard him having, was about not breaking with Western technology, but taking advantage of these mass-produced technologies that we can deconstruct and bend and try to have do something else.

00:22:02.000 –> 00:22:10.000

And the question is how do we do it, and then doing it intentionally within the community that it’s meant to serve. And then the question of time and scale comes up as well. Because you can’t do this stuff fast and Silicon Valley wants to do everything fast. So working with language models, which is the thing that I’ve done the most with. I’m looking at what some of those data sets include is they make it impossible for you to see what’s in the data set because they don’t annotate anything. That was part of one of the recommendations i think Timnit Gebru made to Google was, “Hey, why don’t we actually annotate this and keep some kind of record of what’s in the data set?” and they were like, “No, we don’t actually want that information out in the world.” Huh? Why? But it’s because so much of that information is inherently biased. When we think about where do we get large data sets, who has all of this stuff?

It’s always the same. It’s Western colonial libraries built on Enlightenment. Whatever, however you want to paint it, it’s always going to be who has the most of what, the people with the most power and the people who control the narrative. And that’s going to be totally skewed. I think I’ve gone off a little bit. But what was the original question? I’ll try to bring it back. Around ethics? Oh yeah.

RP [00:23:40]

I’m thinking about it particularly in terms of ethics always requires maintenance work. But technology doesn’t. Technology is trying to maintain power, right?

The top “non-profit” suicide helpline is selling data to a company that is using the data to build and sell an AI customer service software. 🤯https://t.co/BBHPgSoIhj@politico #Ethics #Tech #AI #MentalHealth #Therapy #Helpline

— psypack (@psypack) January 30, 2022

Through different mechanisms thinking about tech or AI or something like that being the carrier of something that requires maintenance work, when the way that we think about technologies is a product. Right? There’s a product over here, a product over here and a product over here. They’re always trying to evolve at a fast scale. I feel like there’s a dissonance in my brain around the ways we counteract these things given that the tools are actually not meant to be used in that way.

EM [00:24:35]

They’re not. And their whole ethos is “fail fast, break things” or whatever, right? That’s their whole thing. And it’s the opposite. No, we need to do the opposite. We need to take care. I think we need to go slow. There needs to be a lot of checking-in with actual people. And those are all the things that are not being done that I’m constantly being told are impossible to do. Because when I’ve even brought up the idea of… looking at the way in my personal practice I’m engaging with this technology, I kind of broke it down, there are three ways that I can do this, and there’s probably a million more but just for the sake of the conversation, there is one way where I don’t use the already pre-trained giant language models that make beautiful prose, but again it’s the corpus of whatever trash is underneath, and I just flat out reject that and I can use a clunkier older algorithm. But I can train it with my own text and it’s self contained and self referential. And there’s a way that works; It allows you to then be able to study the outputs more you understand what’s coming up because you know what went in. And that’s one way of working with this stuff. Then there’s the way where I’m working with it now, which is like, I’m going to use a giant language, you know generated, crazy-making. I’m going to train over it, and then I’m going to study that output. And it’s going to teach me something about this weird mashup of what is mostly still that GPT-whatever thing. Or now I’m generating images. What went into that and what’s happening now, even as I tried to steer it. But what is it doing? And then the thing I’m putting in the third box, which is kind of the dream is: can we make our own? Is there a way that we can make our own giant language models. And then whenever I mentioned this to most people. It’s a straight up, “oh no that’s impossible, you just need too much data and there’s not enough data.” I think there could be.

#SocialMedia #addiction #MentalHealthAwareness #MentalHealthMatters pic.twitter.com/W0Ziccbdk4

— Sophie Fauchier (@sophiefauchier) January 17, 2022

It’s just a matter of how long will it take. And how do we build it? Because I just have to reject that answer because there was enough data to make the other ones that just came from people and cultures and sources that I’m not interested in, Right? I mean, maybe to some extent, yes, we can. I don’t know. There’s certain things like maybe just some basic encyclopedia definitions or things to just base off of that can be somewhat neutral. Again, you can contest that because it depends who made all this stuff. But being faced with the “no, that’s impossible. This is the only way to do things, and we need all this data and we need it now, and it can only come from these giant companies.” It also costs a ton of money to train one of these things. I think GPT-3, I was reading, to do the last final training, It was something in the millions of dollars, and I don’t remember if It was 2 million or 12 million. I might have added an extra 10 million to that, but whatever it is, it’s a lot of money. So it’s money, it’s time, there’s so many factors there.

But what was it again, I lost the question. I jumped off.

DB [00:28:15]

I’m piggybacking off something you just said. Something that comes to mind is one of, and I can’t remember which of the readings it was, but it was when we’re thinking about what value Google has, it was determined that Google would not have the amount of value that it has if it weren’t for Wikipedia being the top search result. And of course, Wikipedia is sourced from data that the community creates, and it’s a completely scrappy nonprofit. The general consensus is that the valuation of Google comes from the strength of its algorithm, but the strength of the algorithm would be nothing if it weren’t for all this data that we have collectively put into the top search result, which is Wikipedia.

So when you describe ways that we can counteract these large data sets of these multinational corporations that have control over all this data and it being quote unquote impossible, I guess it just comes to what is a community like, who is the community you’re asking, what are they willing to put forward in terms of labor? And another portion of… I think it was Jaron Lanier in the “AI is an Ideology” reading that we had passed around was mentioning that a way to subvert the current structure of these data systems is to start to, rather than feed into this idea that these nation states like us and China that are battling over who can create the most dominant weaponized A.I. systems, rather than have some kind of a Cold War situation is to instead value the labor that we are all contributing as consumers that we don’t really get factored into in the end. I love a part of your bio that says “the tactical misuse of technology.” And it’s interesting to think of misuse of technology as just an “intentional use of technology” falls under the category of misuse. And intentionally creating communities that are incentivized in a meaningful way to create data sets for us, for our communities that make sense. I’m really drawn to this idea of an ethical data set which you had mentioned you were trying to outline in the ML5 project that you’re creating. And I just wanted to ask a little bit about how it’s a creative endeavor, but I think it definitely does… If we’re using this definition of a tactical misuse of information or a misuse of technology, how you have used these queer data sets that you had defined how that stands in contrast directly to these other kind of larger data sets? And if you could speak to that a little bit in terms of the queer A.I. project?

EM [00:31:45]

OK, I still wanted to jump back a little bit.

DB [00:31:48]

Oh go ahead!

EM [00:31:55]

The article you referenced, the “AI Is An Ideology,” which I was like, Oh my God, that’s a whole other tangent of a million things that we can open up because even the term “AI,” it’s just marketing, right? Like there is no intelligence. Or it’s a very narrowly defined intelligence. Most recently, it just has to do with finding patterns in big data. I think that’s the most recent incarnation of what this so-called “intelligence” is. But the way it’s used to drive certain narratives and then create whatever, that’s just something that I’m I won’t say much more than that right now, but it’s something…

RP [00:32:35]

But why not?!

EM [00:32:37]

Oh, well, I want to try to answer the question! I think I might still just go on another tangent, and then come back because the next thing I thought of when I was reading this about the arms race. We’ve heard Putin say, I forget what the quote is, but “whoever controls AI controls the world.” This is a serious thing that’s happening right now among the nation states and most recently, GPT-3, which is the bigger language models. And I don’t know if I should say a little bit about these GPT models in general just to give folks that are listening, they’re like, “What are they talking about?”

TN [00:33:29]

That would be great. We’ll put definitions in, but any definition you want to add is good too.

EM [00:33:36]

So GPT models, I think the acronym is “generative pre-trained.” They’re these text models that OpenAI developed. I’ve only used GPT-2 and I don’t have in front of me… let me see if I do have numbers because this is kind of helpful. OK GPT-2 is trained on like a 40 gigabyte data set called the “web texts,” and the way they put that data set together, OpenAI crawled the internet. I think it was mostly Reddit. And anything on Reddit that got three karma or more. They followed that link. They scraped the text and that became “web text,” and that’s the corpus that they use to train GPT-2. Again, this is 40 gigabytes of data. I think it has 1.5 billion parameters. Then later, they released GPT-3, which is orders of magnitude bigger. It’s 100 times larger. 175 billion parameters. It shares the same architecture as GPT-2, but now there’s more data sets. So they’re using that “web text.” I don’t know what these data sets have, but one is called Books I, Books II, Wikipedia and Common Crawl. And the parameters there are 175 billion and the training data set size is 570 gigabytes. And then recently I learned that China took GPT-3 and just blew it up. So they have a model now called Wu Dao. And it has 1.75 trillion parameters. The training dataset size is almost five terabytes. It’s trained on a lot of Chinese. There’s still some English, but it’s like they just dumped in…

@stevejobs_666 #GPT3 ♬ original sound – Steve Jobs

So again, here’s China, who I always say that the US wishes they could be China, right? It’s like a wet dream. If they could just be this awful, you know? They have to do it in a more covert way. Like, “No, we’re not surveilling you. It’s fine. Look, you got to have your persona and make your narrative and we don’t stop you. Aren’t we awesome? Everyone has a voice. Yay, America.” And China does it a different way. So they have a lot of data and then they can make the most powerful and the craziest… this is just wild to me. And this happened really fast because GPT-3, that was released last year? And now this new thing.

So again, I lost the question once again. But I wanted to give context for how fast this is scaling.

DB [00:36:45]

Can I refine that question really quick? Because that’s actually the train of thought I was going down. So you’ve used this idea of small data in your work. You’ve also used these larger training, GPT-2, and we had among ourselves been talking about the kind of homogenized aesthetic of these low-barrier platforms to get into machine learning, like Runway or I think there is one called Playform. Maybe you can elaborate as to your experience using both types of these data sets?

EM [00:37:30]

Yeah, yeah, for sure. OK, that’s helpful. I think laying this out now I have a clear answer for you. So in my experimentation, one of the things I did when I was working on my Processing fellowship is I’m not using any of the fancy stuff. I just use like a Char-rnn thing. And I had some text from Audre Lorde that I had found online, but none of it was really prepped for this stuff. So I had to clean all that text. And this is part of, going back to that human endeavor, all this stuff that they want to make invisible. There’s a lot of labor, a lot of human labor. And one of the articles you all sent that I didn’t read about had to do with Mechanical Turk and all this right? I was like,” Oh yes, this is such a huge part of what it takes to even create any of this stuff.” And then there are people making decisions about how to tag and classify data. And in the case of text, you have to clean it to make it [work] well for the language stuff.

For example, for Queer AI, Ben did all of this labor, set it up as conversational pairs because that was like a dumber AI. So it was the only way. You couldn’t just feed it troves of prose, the way you can with these large transformer models. You have to structure it a very specific way. And if you want to have a conversation that needs to learn from explicit conversational pairs. So that was one thing. And then when I was doing the Audre Lorde one, I literally went through, opened my text editor and I manually went through. They were very, very small, two megabyte, three megabyte. But that’s still a lot of text, you know!? And it was just hours. I just had music on and I was just cleaning, cleaning, cleaning. I got really fast at “Find and Replace,” you know? And and like, “Oh, let’s remove these spaces. Why are there these weird characters?” Because if there are weird characters, then the AI was going to learn about, you know? So you got to just clean, clean, clean. Take all that stuff out.

Finally, I had this thing, I turned it into a bot, and it was fun because it was the first one that I ever made, but still very incoherent. Very clunky. Very interesting to have the conversation and experiment. And because I was familiar with the text I knew, that’s another piece to this. The way that even with Queer AI, that became how to get something back from it that felt enjoyable or interesting was an art form in itself. And you have to become somewhat familiar with how this thing was made and also what’s in it to be able to then give it certain prompts so that it’s going to give you back something that isn’t just goobly-gop technobabble. Which is still happening.

View this post on Instagram

TN [00:40:34]

Yeah. Can I ask you a question about that? Earlier I heard you two talking about these things as reflections or mirrors. Can you talk a little bit about what are things you’ve gotten out of Audre Lorde Bot? I was looking earlier at those rad, weird, queer visual videos. What does that tell you? Is there something that you see about how humans experience queerness that is maybe new through having it fed through these giant infrastructures or these small infrastructures?

EM [00:41:09]

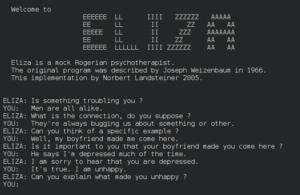

Oh gosh, how to answer that or how to begin to answer that? There’s a few things. When we started Queer AI at that time I was looking a lot like this conversational chatbots and learning the history of the bots, all of that. Are you familiar with Eliza? That’s the famous one. Some psychologists made this bot and it was not smart at all. It has canned responses. You ask it a question and it kind of branches off and gives you already a stock answer for things. There’s a lot of psychology that went into the bot. And I think there is something about, I don’t know if it was so much of a debate, but people talk about the failure or success of the bot. There is something about the success being, “oh, we tricked people into thinking that this thing was intelligent,” right? Whether or not that’s a success, I don’t know. And then the creator of the bot, I forget their name, didn’t like the fact that people wanted to be alone with this thing to be able to ask them these very deep, intimate, personal…the bot became like a confessional. Like you’re talking to the therapist, God, who knows what. But for me what was interesting is this need that human beings have to be seen and to be mirrored by whatever that is.

RP [00:42:55]

I’m sorry. Can you say that again? You cut off a little bit.

EM [00:43:00]

Oh, that I think what became really interesting for me is that just realizing that there’s this need that human beings have to be seen, to be mirrored. Whether or not that’s by an intelligence, an artificial bot, whatever that is, that’s inherent. And that’s what became really interesting to me. So once we had that Queer AI bot, I was having similar conversations where I wasn’t too familiar with many of the texts that went into it. Again, Ben prepped that. And to go back to what was readily available, a lot of those texts that went into that initial corpus are mostly written by gay-cis man or lesbians from a certain queer theater from the eighties. There’s a little before and after and there’s a lot during the AIDS crisis. So as I was chatting with this bot, I’m learning things like if I tried to sext, there was this stuff that would come back was very anxious. There is this tone that this bot was not having fun. There was a lot of “no, no, no, stop, stop, stop. We’re going to die.” And I was like, “What is going on?” And of course this thing, just like, again, the AIDS crisis, there’s just so much there that feeds into an anxiety that is what was coming through. So then learning about that, I would then ask questions to the bot kind of going along with that, asking things like, “Do you think you’ve inherited trauma from your data mother?” Things like that, just to just keep feeding it and having this long conversation about what is intergenerational trauma and how do we pass this along in these data sets, and how does this get replicated across these technologies? And now it’s a feedback loop. Because now I’m engaging with this technology. And is this retraumatizing something for me? How do I produce something else? So that’s part of what I was discovering.

But to answer, a little bit more of the question about the queering. I can’t really speak for Ben cause Ben has their own ideas, but I think for both of us, there was something that was already inherently queer about the machine learning process, because especially in that moment it’s something, now, that is trying to be refined, right? So it’s like, “Oh, so we can have better output. Or more predictable whatever.” And I don’t want that. No, I like this mess. This is interesting. This is already like what is to me a very rich, for something that we’re going to make… We’re going to fuck it up. And on purpose. I want to queer this thing further to see where that can go and what that reveals. Again, good or bad.

And all the stuff with the hidden layers and this idea of, they talk about AI being a “black box.” I think with the neural nets there is, you know, there is the stuff that happens in the middle where you can’t… I think what it comes down to is you can’t audit something. Once you get the output, there’s no way to go back through all of that magic in the middle, which I just call the layers of alchemy that gave you that output to say, “where did this…? How does this get to here?” We don’t know. So in a sense, there is this mysterious, yeah, black box. But on the whole, it’s not… It’s not all mystery. We do know what happens. Back to the whole human labor side, you need a lot of people to comb through data, to prep data to make it ready for the learning or the training.

DB [00:47:34]

As we were reading through some stuff, I was using a really rudimentary text-to-image AI, machine learning process [GAN] that I found to translate some little clips of some of the readings that we were doing. And I was showing it to the group earlier and we have been talking about the possibility or this general anxiety, which is present in all of the survey questions that we had sourced. This general anxiety of machines replacing or machines doing harm to, or in terms of art, machines doing art better, producing or reproducing images and creating work that’s just by default more engaging maybe? But I’m always drawn to the outputs that are wrong. And this rudimentary machine learning program that I found was very wrong, and it almost had no rhyme or reason to why it was spitting out anything. And I think there’s no way to refine any of those keywords in a way that makes any sort of sense to the imagery that’s coming up. So I’m wondering with this new line, the Unsupervised Pleasures that you’re working on, those video pieces, how you find that process of teasing out imagery or movement or just the general aesthetic by refining keywords? And what kind of keywords are you using, especially when you’re drawing from this corpus that you had assembled?

The Moon from Emily Martinez on Vimeo.

The moon is the mother of all the gods.

EM [00:49:27]

Oh my god. That’s a good question. And one where I really want to make a workshop for this because I think that it is a good entry point for people to start using this stuff. Right now I’m using this Google CoLab notebook. Basically, you could run code in the cloud and use Google’s cloud computing, all of their fast GPUs. I have an old MacBook, there’s no way I could run this stuff. And those notebooks are nice because you don’t have to be a coder. I’m using one that somebody made, you just copy it. It sets up code in these blocks, you run through the cells and you’re basically pressing play to execute all the different cells or load your libraries.

There’s one I’ve been using with VQGAN+Clip, which are these two libraries that have been combined. Clip is a library that OpenAI made, and I think it’s just text and image pairs. And I don’t know exactly right now, like I can’t spit out numbers. And then VQGAN is the image-generating library. And OpenAI has this whole… Do you all know the backstory of how all this came together? Because OpenAI has their own version of this called Dall-e, (like Wall-E and Dali…) whatever.

They’re so clever. So they tease this thing, which is you type in text that spits out an image. And they teased it, and then they took it away because that’s what they do. One of the libraries, Clip, they had publicly released, which is again the image and language pair library. But then that image generator one, that’s the part that’s their secret sauce, whatever. They did not release their thing. But the internet went at it and they’re like, we’re going to make our own. So they took Clip and they took VQGAN and they were like, ”Here you go, world. We just made a Google CoLab notebook. You can make your own type and text and generate image thing. We don’t need OpenAI anymore. This is what’s happening.”

I think this happened in July, where suddenly there is all this AI art that was being turned into NFTs. There’s all these Twitters with people basically making memes. Like they’re just typing in meme text and then generating images of whatever from this VQGAN+Clip. But I’ve been using it and taking text that was generated by the Queer AI text corpus and then feeding that into VQGAN+Clip and then having that generate images.

The things that you can do to try to make it, because when you just put in your own plain text, it depends. It likes nouns because it is easy, right? If you tell it “a guitar with a yellow table and five chairs and some shoes,” it’s going to somehow put that together. But when something’s a little bit less literal. Then what gets generated is pretty random. So there are different ways that you can try to steer what the output is. One way is to use modifier keywords. So I think part of that Clip library, it learned a lot of things. It knows what if you say something like, “render like Maya” or “render like cinema4D”. Or there’s all these ways that you can give it a style or image quality. You can use the Wikipedia database and make this in the style of X painter. And then the output is going to start to take on these different qualities. I’ve been using keywords like “hyperreal,” and there’s certain ones where I wanted to heighten the reality part and then using a lot of textural things. So I like to give it things like “furry,” ”lace,” “latex,” different colors. If you use words like “queer” or “gay” it likes to put rainbows on everything. So that’s interesting (laughs). And then another way that you can give the image more structure because the initial image just starts with this weird…like it’s referred to as the “latent space”, it’s just this gray blob where there might be something that looks like some pixels or like slightly different colors. But it just looks like a weird gray blah, bland background. But you can give it an initial image, and it’ll start to use that as the thing it generates from. So the most recent ones I made, I went through a lot of like images of bodies in embrace or erotic poses, some stuff from porn, but things where there were bodies together and using that to base off of.

The thing that happened in all of it, but especially with some of these is the results are so abject. The bodies do not retain any integrity as bodies, faces just mash up, they just smash the nose and an ear. And something looks like it’s the snout of an animal. This this thing doesn’t know what it’s making. Where again, back to like this idea of intelligence. And then also the way that our brains are wired to try to recognize patterns and things. And even if it was better, whatever that means, but if an eye is just slightly off, that’s already going to read as, “whoa, that’s that’s just wrong.” And this is worlds wrong. Because I’ve been running it to do maybe 1000 frames. And the longer it runs, it plateaus at a point. That’s kind of the aesthetic that maybe DB you were, like, a lot of this stuff starts to look the same. You can tell, “Oh yeah, this came from this AI algorithm because they all can have the same flavor. And so these feel like they start to jiggle in place a little bit because they’re not really doing much, but for maybe the first like 500 frames or so is where you really get to see where it’s moving. And with a lot of the stuff that started out with bodies embracing or bodies that were clearly bodies, I don’t know how this thing did not learn about bodies, but the bodies start to break down. The heads become decapitated. I remember one that was two women. I don’t remember exactly what they were doing, but it was an erotic image. And by the end of it, it looked like decapitated heads on the floor and then there was this ominous man in the background. There’s just a way that it deteriorates into violence and there’s abject disembodied mess. And those for me become interesting as studies. But I’m looking at them and being like, “Wow, I love this.” I’m looking at this and I’m like, “Whoa, OK, what’s going on here?” I am also trying to make things that feel I want to love them in a genuine way, but that seems to be more challenging. I think the waiting for the image to emerge, it’s something that I’m experiencing that reminds me of…

There’s something addictive about it, and I’m like, “Oh, this is like rubbing a scratch off. Am I going to win today?” What’s going to come out? And then there’s also something about it that takes me back to TV, like cable back in the day, trying to look at the scrambled channels. And I’m trying to watch those dirty channels, and can I see something? Is something going to come out? And it feels that way. That’s been my experience with playing with some of it.

EM [00:58:47]

And Runway. I’ve used Runway. It’s fun to learn because it’s easy. Again, you don’t need to learn how to code. The UI is very visual. I would describe it as the Photoshop for AI because, again, you don’t have to be a coder. You go in there, but you still have to prep like you’re trying to do an image-generating thing. I recently took an online course with School of Machines and Make Believe, and it was all in generating images and video and we used …what is it called? Totally forgetting the name of the model for like image generation that’s popular right now that people are using to make all the morphing faces and everything morphs in and out of whatever it is.

DB [00:59:48]

I know stylistically, but I don’t know the name of it.

EM [00:59:49]

I don’t know that it’ll come back to me, probably after this is over. Just add a footnote somewhere. But using that one it took so long to prep the data set. Because the images have to be 1024 x 1024.

You have to clean them in a way so that there are consistent and recognizable features that the thing is going to learn from. Like if the image is too diverse, you end up with something that generates a lot of… everything starts to look like earth tones. Honestly, everything starts to look kind of brown, and it’s something that people were saying is like, “Oh, you overtrained the model” which I think just means, “oh, you gave it too much and it’s too diverse and too all over the place, so it doesn’t know what to do with anything.” So everything turns into a brown soup. So there’s a lot of work that goes into the prepping and then running the stuff if you’re getting super into it, it gets really expensive.

It’s still not low enough barrier to entry. I think the CoLab might be a little bit more affordable because it’s doing the $9 a month plan, and that seems like plenty. Where with Runway you can spend way more than that the more you do. Because they’re charging you per time you train, or every time you try to download some images. And then there’s a monthly thing. I don’t even know how their pricing system works right now. But it is a bit of a fun one just to poke around and see what’s fair and try out some of the different models.

There’s one on there. I forget what it’s called, but it’s one of the image classifier ones and I gave it images of vulvas. And it doesn’t know what those are, of course. But it does try to label them. It draws rectangles around things and then just puts labels. And it was a lot of parts of men. “A man’s hat, a man’s tie, a man’s mustache, a man’s…”, And then a lot of animal parts as well, an elephant’s trunk or the tail of a cat. A lot of flowers. A vase with flowers, petals of flowers. So that was an interesting experiment. But I didn’t publicly put that anywhere because of consent for the images I use. The images are publicly available online, but these are somebody else’s images. I felt a little bit more protective of that. But that was more for my own learning and my own understanding of “What is this thing trained on?” And what if I give it this other information and data I know it doesn’t know anything about. What is it going to give me back? Oh yes, men. A lot of men. And animal parts. Right.

RP [01:03:10]

It makes me have questions around the way that we define intelligence in a capitalist society, given that there’s particular things that these things are putting out, right?

And of course, it could be putting out alternative things. But I was wondering, after having delved into these different technologies, how do you feel? What do you think? How do you define how intelligence is being rendered within AI space?

EM [01:03:41]

Yeah, I mean, It’s not. Again, it’s so narrow. And when I think about intelligence in the broader sense, or the things that I value, at least, I don’t think they’re things I’m going to ever find through a digital technology, because that’s not where that intelligence lives. Whether or not we can communicate about the intelligence of this technology is one thing. But I think about different ways of knowing, through my body, through being physically present and in tune with how I’m breathing, the Earth literally under my feet when I stand under a tree, when I breathe with someone else, right? There’s all sorts of intelligence there that just, maybe I’m going too far out, but when AI being that super narrow thing about prediction and finding patterns in a data set, that’s definitely not the intelligence. That’s like one little sliver. But that’s not what I want to be trying to engender or nurture more. I think between when I’m not doing this computer work, what I’m mostly doing is the opposite. I’m away-away from all the tech.

find through a digital technology, because that’s not where that intelligence lives. Whether or not we can communicate about the intelligence of this technology is one thing. But I think about different ways of knowing, through my body, through being physically present and in tune with how I’m breathing, the Earth literally under my feet when I stand under a tree, when I breathe with someone else, right? There’s all sorts of intelligence there that just, maybe I’m going too far out, but when AI being that super narrow thing about prediction and finding patterns in a data set, that’s definitely not the intelligence. That’s like one little sliver. But that’s not what I want to be trying to engender or nurture more. I think between when I’m not doing this computer work, what I’m mostly doing is the opposite. I’m away-away from all the tech.

Gosh, last year I started, for health reasons, two years ago, I started seeing an acupuncturist and I started to get deep into Chinese medicine. I have all this health stuff where between that and Ayurveda I have ways of healing my own body. I started an active Qigong practice. So I do energy work, which for me is another form of intelligence that these systems are so far away from. Like so, so far away from. Those are really the ones that I’m like, we need more of this. Understanding what those systems are doing is. Gosh, I mean, building a bridge, I’m not sure. I think when I think about the systems, maybe the narrative part, and how it’s using data to try to tell us certain things based on this “data.” What I do think a lot about is the power of story. We need to make our own story. We meaning small communities, intentional communities. I don’t want someone telling me what I am based on all of the stuff that’s been collected through “the intelligence” and then being narrated through data points. That’s the biggest problem. So I think engaging with this as an artist is about getting into it, getting dirty with this stuff. But so that we can then maybe from it have these kinds of conversations and rescue the story that we want to tell if we’re working with this stuff. And if we’re not working with this stuff, again, it’s still the same question; what is the story we’re trying to tell? How do we protect bodies of knowledge that belong to particular communities and honor lineage. All of the things that I think the current capitalist Western, whatever, the big tech companies are not giving us. They don’t want to give us. That’s just that’s never going to come from there.

RP [01:07:45]

Right, it starts getting me going thinking about the possibility of an AI project that caters towards the knowledge and stories of people that are working behind those technologies. Because it seems like thinking about labor extraction and thinking about the people that are behind the scenes, I just started thinking about, OK, so we’re doing all of these things, but it’s supposed to make our lives easier. These are tools that are supposed to help us, but also feels very isolated to a specific kind of privileged community? I don’t know.

TN [01:08:40]

No, I think that totally makes sense. Through this whole conversation, I’ve been listening for these moments of resistance or potential strategies for resistance. Because I think the title of the episode sets up this dyad of acceleration and slowness. And it’s hard because I’m totally partial to the idea of slowness and pulling back from this thing. There’s also part of me, and I think we talked a bit about this in a previous episode, the idea of like cottage-core, Ted Kaczynski is also its own form of fantasy. So some degree of acceleration has to be engaged, whether that’s to redirect, or fuck it, let’s have a singularity and be done with this already. But I really appreciate one thing that Emily is talking to that I don’t think we’ve hit on yet, really at all, is a kind of like autonomy or smallness of own[ing] your data set and when Google comes knocking or whatever, no, you keep that up. And that’s tricky. I think that internal thing has its own problems, but that’s a real call for developing literacy around these things. So you even can. I think holding Wikipedia up as that example is great because I fucking love Wikipedia. But then the value of Wikipedia gets sucked up also by Google. So how can you resist those larger things? And that definitely starts with literacy insofar as it’s possible, you know?

DB [01:10:01]

I think maybe one of the things that scares people so much or that seems so alien to people is the fact that what you’re describing, the smallness of defined community or even your body itself, [it] has limitations. And this idea of an ever-hastening, ever-growing AI abstract mind that lives in a non-space and completely learns at this exponential level and exists in this weird vacuum that seems really unable to be controlled, unable to be even learned by our, in comparison, “puny” brains. It just seems like something that’s so alien.

One of the readings was asking the question of whether or not AI could have mental illness and one of those… to live in that kind of abstract zone… I think schizophrenia is called like the journey inward, which is this constant spiral into yourself. It just feels like it stands in complete contrast to some of the other examples that you were giving, Emily, of the things that you do to get out of this space, like being fully bodied and be really present in something that is definitely human and definitively human. Thinking about some of the strategies, as we’re going through these readings and as we’re jumping from one topic to another, I’m trying to look for these subversive elements or communities that are actively engaging with this technology in a way that is supposedly breaking it or adding another layer that that goes against what it’s constructed to do. I know that I found very few examples like it with Web 3.0 technologies in particular, like cryptocurrency. So hopefully with our next conversation, as we move forward – we will be exploring cryptocurrency in the next talk that we have – maybe some of these ideas that we’ve tossed around in this conversation can apply to that one as well.

EM [01:12:18]

Can I add a final thought? I don’t know how long this is going to be. Back to that article, I glanced at it briefly, Can AI Have Mental Illness, and then this whole thing about the singularity, or this like general AI. We don’t even need to go that far. Like I started to have so many issues before even reading it, OK, the mental illness already!? I think a lot of the ways that we’re responding to this stuff that is going to be deemed “mental illness,” no, that’s that’s your body’s normal, healthy response to something is wrong with the environment. Get out. (laughs)

But to bring the AI back to how it already exists and it’s already affecting us, it doesn’t have to be some unknowable super-intelligence. It’s not ever going to be that, I don’t think. And it’s already here fucking with us deeply. And back to Google and YouTube, and I’m going to tie this back, hopefully, if I find my notes, to something Tabitha said in one of the other conversations you all had about the persistence of ideology and this idea of, we don’t have fixed waypoints anymore. And I forget the name of Putin’s assistants, the one that came from the postmodern theory. I read all about and was like, “Oh my god, okay.” This person that has this training in postmodern theory and theater and is now using it to create all of this content that’s going to fuel the radical right and the radical left. Nobody knows what’s real, you know? And we already saw the last election cycle and what’s been building probably for the last ten years and the right and this might tie into your blockchain conversation more, what are the values underlying all of this? And it’s like the right and 4chan and all those folks kind of taking over the internet and creating so much culture online. And then Facebook, YouTube, the algorithm is feeding everybody their stories and keeping everyone trapped in the vortex of… What do we have now? Q Anon? I haven’t followed the latest, but it’s already here, right? The way that these algorithms are manipulating people and then suggesting things to us and how deeply intentional that is because the foundation for so much of how this all works it’s all based on behavioral psychology. What is this guy’s name? James Bridle has this great book and I’ll share an article. You can watch YouTube videos if you Google on the Kids YouTube and how that whole thing works.

And that to me is such a great example of everything that we’re talking about now with how this is manipulating how our brains literally are wired. I think that came up in some of the surveys where people were talking about what this technology is… Something that was like, taking away from you or making life worse in some way? And somebody said something about not being able to access quiet space or to let their brain quiet down. And somebody else said something, I think, that the social media algorithms kind of mess with their neuro-circuitry. Yeah, they’re training us to be addicted. This is just triggering addiction patterns. They’re starting it really young with little babies on YouTube that are being fed by ads, algorithm that needs more. It just needs more to extract, more and more and more to keep doing its job. And it’s abusive, it’s abusive at its core. And that is what James Bridle points out. This is the big thing in bold italics, this is the system, and this is how it’s designed. That is then going back the way this has been used with Facebook to all the fake news.

I’ve noticed lately that my social media use has been effecting my mood/mental health. I’ve decided to take a break from certain ones, like Instagram and Twitter. Here are some helpful questions I asked myself when I decided to take a break! (: from selfcare

And now when I look at the language models, this is going to go other level because the stuff you can make with GPT-3 is like… We’re not going to know what’s what. I think that the battle for reality or how to even cohere around values or things that we align with, that becomes more and more difficult. And we don’t need the “super-intelligence” to do it, it’s already here with the current intelligence. Whatever, “narrow” intelligence and all the networked tech systems that are in place.

TN [01:18:07]

I think that’s it. I think you did it.

DB [ 01:18:10]

We’ve done it again! We’ve ended on that note!

TN [01:18:14]

I’ll call it there? Stop recording?

Featured Readings:

Lawrence Lek for New Mystics: posthuman creativity & weirded climates in a new text collaboration between writer, artist & AI

AQNB

Sept. 22 2021

https://www.aqnb.com/2021/09/22/lawrence-lek-for-new-mystics/

AI is an Ideology, Not a Technology

Jaron Lanier and E. Glen Weyl

Wired

Mar. 15 2020

https://12ft.io/proxy?ref=&q=https://www.wired.com/story/opinion-ai-is-an-ideology-not-a-technology/

Keynote Address: Jaron Lanier

Technology Policy Institute’s Aspen Forum

Aug. 19 2019

https://www.youtube.com/watch?v=fdohkulww0k

Microworkers are “Disempowered to a Degree Previously Unseen in Capitalist History”: An Interview with Phil Jones

Alex N. Press

Jacobin Magazine

Nov. 22 2021

https://www.jacobinmag.com/2021/11/microwork-amazon-mechanical-turk-machine-learning

Anatomy of an AI System: The Amazon Echo As An Anatomical Map of Human Labor, Data and Planetary Resources

Kate Crawford and Vladan Joler

AI Now Institute and Share Lab

Sept. 7 2018

https://anatomyof.ai/

Can Artificial Intelligences Suffer from Mental Illness? A Philosophical Matter to Consider

Hutan Ashrafian

Science and Engineering Ethics

Jun. 28 2016

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5364237/

What Can Machine Learning Teach Us About Ourselves?

Interview with Emily Martinez, ml5.js Fellow 2020

Johanna Hedva

Processing Foundation

Sept. 9 2020

https://medium.com/processing-foundation/what-can-machine-learning-teach-us-about-ourselves-65b268431890

Emily Martinez (they/she) is a 1st generation Cuban immigrant/ refugee, raised by Miami and living in Los Angeles since 2012. They are a new media artist and serial collaborator who believes in the tactical misuse of technology. Their most recent works explore new economies and queer technologies. Long-term projects explore collective trauma, diasporic and transnational identities, archetypal roles, and post-apocalyptic narratives. When Emily is not working, they are learning to love and doing their energy work.

Emily’s art and research has been published in Art in America, Media-N, Leonardo Journal (MIT Press), Temporary Art Review, and Filmmaker Magazine. Their work has been exhibited at international venues, including Drugo More (Rijeka, Croatia), Transmediale (Berlin, DE), Yerba Buena Center for the Arts (San Francisco), MoMA PS1 (New York), V2_Lab for the Unstable Media (Rotterdam, NL), The Luminary (St. Louis), The Institute of Network Cultures (Amsterdam, NL), and The Wrong Biennale.